Berk Özdemir

hello@berkozdemir.com

linktr.ee/princesscamel

www.berkozdemir.com/

www.princesscamel.com/

contact@princesscamel.com

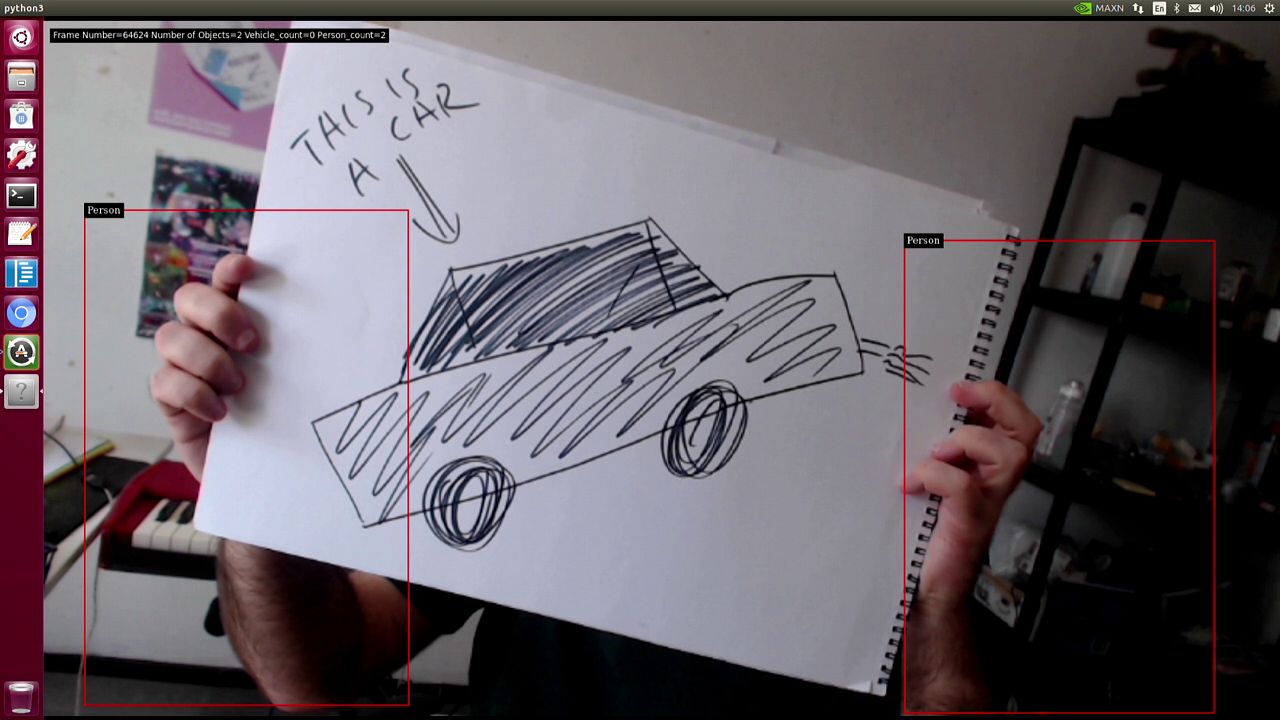

With this project, I am exploring the possibilities of using a real-time object detection system as an interactive, performative instrument.

I am using a single-board computer, the NVIDIA Jetson Nano, which runs the DeepStream SDK: a real-time intelligent image analysis framework for surveillance, analysis and security applications, or for DIY projects. DeepStream can process live camera input or recorded video. The default model that comes with DeepStream detects people, cars, bicycles and road signs.

I modified some of the DeepStream Python code examples so that they send data about each detected object to other computers on the network using the OSC (Open Sound Control) protocol. This lets me control any software that accepts OSC messages.
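The author's actual modifications live in the project's repository; the sketch below does not reproduce them. It only illustrates, under stated assumptions, what "one OSC message per detected object" can look like on the wire, using Python's standard library to encode OSC 1.0 messages by hand instead of an OSC package. The `/deepstream/object` address, the argument order, the `Detection` record and the `send_detection` helper are all hypothetical; in a real pipeline the values would be read from DeepStream's object metadata.

```python
import socket
import struct
from dataclasses import dataclass


def osc_string(s: str) -> bytes:
    """Null-terminate an ASCII string and pad it to a 4-byte boundary (OSC 1.0)."""
    raw = s.encode("ascii")
    return raw + b"\x00" * (4 - len(raw) % 4)


def encode_osc_message(address: str, *args) -> bytes:
    """Encode an OSC message with int32 ('i'), float32 ('f') and string ('s') arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        elif isinstance(arg, str):
            tags += "s"
            payload += osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return osc_string(address) + osc_string(tags) + payload


@dataclass
class Detection:
    """Hypothetical per-object record; real values would come from DeepStream's metadata."""
    frame_number: int
    object_id: int
    class_id: int
    left: float
    top: float
    width: float
    height: float


def detection_to_osc(det: Detection) -> bytes:
    # The address and argument order are illustrative assumptions, not the project's format.
    return encode_osc_message(
        "/deepstream/object",
        det.frame_number, det.object_id, det.class_id,
        det.left, det.top, det.width, det.height,
    )


def send_detection(sock: socket.socket, target: tuple[str, int], det: Detection) -> None:
    """Send one detection as a single UDP datagram (OSC is commonly carried over UDP)."""
    sock.sendto(detection_to_osc(det), target)
```

For example, `send_detection(socket.socket(socket.AF_INET, socket.SOCK_DGRAM), ("192.168.1.20", 57120), det)` would send one datagram to another machine on the local network; the IP address is a placeholder, and 57120 is the port SuperCollider's language (sclang) listens on by default. UDP suits this use because a lost message is simply superseded by the next frame's data.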

How to use this data is, of course, up to one's imagination: it can be mapped to keyboard, mouse or gamepad inputs to control software such as videogames, used to generate or control visuals and sound, and so on.

I use SuperCollider to parse the incoming OSC data from DeepStream and map it to parameters of the synths I create. Every numeric value DeepStream sends (the x, y, width and height of each object, the frame number, the number of people, cars, etc.) is stored in control buses in real time, where it can be processed on the fly and mapped to anything I have built in SuperCollider. This lets me turn the movements of people and vehicles captured by my camera into sound and noise, and I am mainly building performance and composition setups around that.
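In SuperCollider itself this would typically be done with an `OSCdef` responder writing into `Bus.control` buses. The Python sketch below only mirrors the idea on the receiving side, as an assumption-laden illustration: decode an incoming OSC message, keep the latest value of each field in a named slot (a stand-in for a control bus), and rescale a value into a synth parameter range with a clipped linear mapping modelled on SuperCollider's `linlin`. The `/deepstream/object` address, the field layout, the `ControlBuses` class and the 1920-pixel frame width are hypothetical.

```python
import struct


def read_osc_string(data: bytes, offset: int) -> tuple[str, int]:
    """Read a null-terminated, 4-byte-padded OSC string; return it and the next offset."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    return text, (end + 4) & ~3  # skip the terminator and the padding


def decode_osc_message(data: bytes) -> tuple[str, list]:
    """Decode an OSC message whose arguments are int32, float32 or string."""
    address, offset = read_osc_string(data, 0)
    tags, offset = read_osc_string(data, offset)
    if not tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    args = []
    for tag in tags[1:]:
        if tag in "if":
            fmt = ">i" if tag == "i" else ">f"
            args.append(struct.unpack_from(fmt, data, offset)[0])
            offset += 4
        elif tag == "s":
            text, offset = read_osc_string(data, offset)
            args.append(text)
        else:
            raise ValueError(f"unsupported OSC type tag: {tag!r}")
    return address, args


def linlin(x: float, in_lo: float, in_hi: float, out_lo: float, out_hi: float) -> float:
    """Clip x to [in_lo, in_hi] (assumes in_lo < in_hi), then map it linearly to [out_lo, out_hi]."""
    x = min(max(x, in_lo), in_hi)
    return out_lo + (x - in_lo) / (in_hi - in_lo) * (out_hi - out_lo)


class ControlBuses:
    """Hypothetical stand-in for control buses: the latest value per named slot."""

    FIELDS = ("frame", "object_id", "class_id", "x", "y", "w", "h")

    def __init__(self) -> None:
        self.values = {}  # slot name -> latest int or float value

    def handle(self, data: bytes) -> None:
        address, args = decode_osc_message(data)
        if address == "/deepstream/object" and len(args) == len(self.FIELDS):
            obj_id = args[1]
            for name, value in zip(self.FIELDS, args):
                self.values[f"obj{obj_id}/{name}"] = value
```

For instance, `linlin(buses.values["obj7/x"], 0, 1920, 100, 2000)` would turn object 7's horizontal position into a frequency between 100 and 2000 Hz, assuming a 1920-pixel-wide frame. Keeping only the latest value per slot matches how a control bus behaves: synths read whatever value is current, regardless of how often messages arrive.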

Github link: https://github.com/vortextemporum/DeepStream-Loves-Osc

THESIS

RE-EXPLORING VIDEOGAMES FOR AUDIOVISUAL PERFORMANCE AND COMPOSITION

The thesis introduces how videogames can be approached beyond gaming and used as raw material in creative artistic practice, by theorising game elements such as sound, space, gameplay and formal structure in terms of musical composition and theory.